This day was meant to come. We were all waiting for it to finally hit, and it did.

Here it is: Watch the Skies Trailer

AI is being officially utilized in films on a directorial level. This isn’t using it within the motion graphics realm, where it could be warranted, but rather something entirely different. This “enhancement” completely changes the entire landscape of filmmaking as we know it.

Essentially, an AI startup can take foreign language films and dub them into English, making them sound as if the actors are speaking English. The actors record their lines in English in a studio, and AI regenerates their faces to match. It’s all in the video above. It’s only 2:53, so you can take the time to watch it.

The creators of “vubbing,” as they call the technology, view it as a major win for making foreign films more accessible. Finally, you can show films to your friends without them complaining that they have to read subtitles, even though people now often watch films in their own language with subtitles on, in public places like the office, to name one. However, opening up films to people who wouldn’t have enjoyed them in the first place isn’t a good thing. And removing the native speech and its nuances, along with the subtitles, is more detrimental to filmmaking than anything else. And it’s on a bigger scale than just the language that’s being used.

Filmmakers are always looking to save a buck. That’s how Hollywood is able to make great movies, better than the fucking $200 million slop that’s being churned into theaters every weekend. One could argue that they have to spend more money now to compensate the actors, sound crew, and AI company, but that’s a temporary expense. This is the test run. This is them flexing their muscles after a few years in the gym and finally seeing results. This is the beginning. It doesn’t end well.

We start here: having the actors dub over themselves in English. Notice in the video how the older actor is a bit perplexed, and even disturbed, by the new technology while the young actress giggles at how amazing this new creation is. She sees the shiny new thing while the old man sees it as something to investigate just a little longer. How far can this technology go? How much are they willing to rely on this technology for communication? That’s what film is: a vessel for a message. The only thing I learned from film school is a theory from Marshall McLuhan called “The Medium Is the Message.” To summarize: the way something is presented is how we perceive it. It’s probably the only useful theory to use in film. Everything else is gobbledygook about shot style and tHeMe. How we perceive is how we understand. So where does this leave us?

We’re perceiving the actors speaking English, so what happens if there’s a phrase that can’t be translated into English? Every language has cultural terms that are only perceptible in their own culture. A translation can only compare them to something familiar, drawing on an understanding of both cultures to find middle ground. That’s the beauty of different cultures. We’re not the same. That’s a great thing! When we try to blend a bunch of different cultures into one thing, we lose all the history, meaning, and connection that we had to our upbringings. We lose the past.

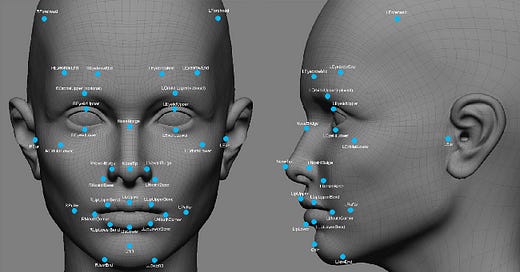

That’s a political sentiment, but that’s just one layer of the onion. The face-regeneration software looks uncanny, and doesn’t climb out of the dip of that valley, yet it gets to the point where you might actually think they’re speaking English. So how far can this go? The answer will be proven in time, but we don’t have to look too deep into our crystal balls.

If you remember the SAG-AFTRA strikes from last year (it didn’t feel like last year), a big part of the reason the union went on strike was to push against the use of generative AI to capture actors and performers to save money. Non-union and union contracts vary by the thousands, but using generative AI takes out almost two departments. Bills were signed and agreements were drawn up, but what happens when the live-action movies with live-action actors don’t make back their budgets? Studios are still eyeing ways to use AI to replace extras. It’s already happening before our eyes. A large chunk of movies are already replacing on-location sets with massive LED screen backgrounds to replicate the setting. We’re losing the human touch in movies, and nothing is stopping it. Sure, a few bills from Gavin Newsom were meant to halt it, but film will leave Los Angeles if that community continues to crumble. Let’s not even get into the capabilities of generative AI creating scripts. Writers will become prompt writers, and they won’t even be employed for their creativity anymore. The studio heads committing sexual harassment violations, and God knows what else, will sit in their chairs and wait a few hours for a fresh new script to pop out of their machines. We all see the generative AI videos on TikTok, Instagram, and YouTube getting eerily close to looking like the real thing while maintaining a cohesive aesthetic.

I should add an asterisk here. I’ve used AI to help me, but only to help. I create, and I use AI to polish. I don’t rely on it as a crutch or a substitute for creativity. I use it as a tool in my toolkit. And that’s how it should be. You can argue “vubbing” is just another tool in the kit, but the implications are larger than most people are letting on. We’re seeing the shiny facade of glass instead of looking a little deeper into the stripped retail space.

We’re in a wedge moment. The split between two completely opposite realities is becoming ever more present: those who want their human creativity and those who don’t. While there are some who strive to use tools to lift themselves up, there are those who use themselves to lift the tools up.

I’m hoping I’m wrong, and we’re years from this point, but we’re getting there faster than we think. It’ll be here before you know it. I just hope some people keep creating. I will.